Unlocking a Just AI Transition

Article In The Thread

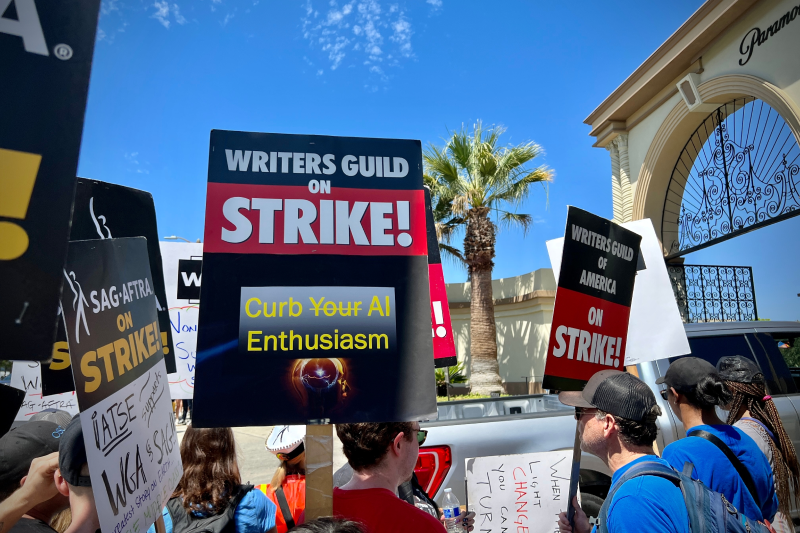

vesperstock / Shutterstock.com

Oct. 31, 2023

Nearly a year after OpenAI’s release of ChatGPT sparked an ongoing explosion of public interest in, and fear of, generative artificial intelligence (AI), governments are finally coming together to figure out how to manage what Joe Biden called the enormous “potential” and “peril” of AI, impacts that will greatly affect the global economic landscape. On November 1, the UK will gather leaders from governments, academia, and industry for a high-level international AI Safety Summit. Similar processes are underway at the UN, G7, and the Organization for Economic Cooperation and Development (OECD). China also recently announced that it was launching a Global AI Governance Initiative involving some 155 nations that participate in Beijing’s Belt and Road Initiative.

It is early days still, but so far many of these high-level, global AI governance conversations focus on a narrowly defined conception of AI safety (i.e., hypothetical scenarios in which AI systems fall into the hands of malicious actors or escape human control). Such concerns are valid and should be addressed. But if society is to avoid the most urgent and likely harms from AI, then global governance needs to focus not just on AI safety, but on AI justice.

AI and Inequality

A major risk in the growing field of AI is its potential to reinforce existing injustices and widen inequalities. In fact, it already does. A long list of technologists, researchers, and scholars have documented how biases in machine-learning systems harm marginalized or minority populations, whether in healthcare, hiring processes, or policing. The problem is no doubt as acute in low- and middle-income countries, where budget-constrained governments are keen to adopt machine-learning tools but where institutional and civil society capacity to identify and mitigate bias is weaker.

AI also stands to worsen economic disparities and dislocations. Already, algorithmic mediation dehumanizes many jobs and limits the prospects for workers to grow and advance, entrenching the chasm between labor and management. If guided solely by profit motive, firms will use powerful new AI systems in ways the writer Ted Chiang likens to a “management consultant” — to dispassionately maximize efficiency and profits by cutting workers with little regard for the social costs.

The effects of AI on labor markets in the developing world are even more pronounced. As journalists have reported, in the world of generative AI, the workers first on the chopping block are the millions employed in the business process outsourcing industry. Call center workers in the Philippines, IT troubleshooters in India, and copy writers in Kenya all face displacement by chatbots.

New jobs are being created in the developing world to perform the data annotation and content moderation work needed to train machine-learning models. Millions are reportedly employed in such work, and Google has said the number could reach a billion in the coming years. But these jobs are tedious, precarious, low-wage, and prone to labor and human rights abuses. The situation is emblematic of the divide that will only yawn wider: As rich nations build and capture the bulk of the proceeds of exponentially advancing AI systems, a growing underclass in developing nations will perform the menial work required to develop and maintain those systems.

Of the nearly 300 large language models in the world right now, half were built in the United States and 40 percent were built in China. On the current path, a handful of rich nations stand to capture the lion’s share of the proceeds from generative AI. McKinsey projects that by 2030 rich nations could see up to five times the net economic benefit from AI that developing nations see.

Left unaddressed, these disparities and dislocations are sure to fuel populism, migration, and social conflict on a scale unprecedented in the digital age, in which extreme concentrations of power and power asymmetries are already causing harm and upheaval.

A Just AI Transition

When it comes to thinking about how to govern AI, the duality policymakers should consider is not so much “potential” and “peril” but more “haves” and “have nots.” In practice, that means making justice a central priority for AI governance.

Much urgent work has been done on algorithmic justice and on identifying principles and interventions to push AI systems toward greater equity and fairness. When it comes to global governance, there are a few principles to start with. One is inclusion. At a minimum, developing countries should have an equal seat at the table in global AI governance processes and bodies. Even if they are not building cutting-edge AI systems, societies in the developing world will experience the impacts of those systems and thus have a stake in how they are regulated.

At the same time, a just AI transition will only be possible if global power disparities are reduced. That will require that developing countries have access to cutting-edge AI models and can build the capacity to safely tailor them to local contexts and needs. A team of researchers at DeepMind has proposed a new Frontier AI Collaborative that could enable the shared use of high-quality datasets and foundation models. Beyond that, philanthropies, foundations, and rich-world governments, acting through the World Bank, IMF, and national development finance bodies, should marshal financing for connectivity, compute, and data in developing countries. This support will assist these countries in building their own AI ecosystems and markets.

Finally, justice depends on accountability. Right now, there are few ways to hold an AI system, or the company that created it, to account for harms it might cause. In addition to creating national regulations that hold corporations accountable for the actions of the systems they build, we’ll need international mechanisms or bodies along the lines of the Bank for International Settlements or the World Trade Organization that can hear claims and provide some means of redress.

We are still at the beginning of what will be a long road to global AI governance. But the priorities and frameworks international policymakers set now will determine the direction of travel on this road for years to come. If we want AI to be a technology that benefits all, then justice must be at the center of our efforts to govern it.
